Ethical AI: Responsibility, Governance, and Practical Implementation

Author: Viacheslav Gasiunas

1. Introduction

The rapid integration of AI into critical decision-making processes brings unparalleled efficiency and innovation, but it also raises urgent questions about accountability, fairness, and societal impact. Without a clear ethical framework, organizations risk undermining public trust and facing significant legal and reputational consequences.

In recent years, artificial intelligence (AI) technologies have advanced rapidly. From automating routine business tasks to supporting critical decisions in domains such as healthcare, finance, and transportation, AI has become firmly embedded in daily life. Alongside these opportunities, however, come serious risks: discrimination, personal data breaches, opaque algorithms, and unforeseen failures. This is why Ethical AI has become a priority not only for large corporations and government agencies but also for startups and small businesses.

In this report, we will:

- Define key concepts and distinguish between AI Ethics and Ethical AI.

- Analyze the current legal and regulatory framework, international standards, and internal requirements.

- Set out the core principles of Ethical AI and practical approaches to their implementation.

- Describe organizational structures, roles, and processes that ensure robust AI governance.

- Provide an overview of the tools and methodologies for technical implementation.

- Assess the main challenges and risks.

- Offer detailed recommendations for deployment in different types of organizations.

- Conclude with findings and future perspectives.

Our goal is not merely to discuss theory but to provide a concrete, step-by-step guide that any company can adapt.

2. Key Concepts and Terminology

Before implementing ethical AI strategies, it is essential to establish a common language and understanding of foundational terms. Confusion between academic inquiry and practical application can lead to misaligned priorities and ineffective governance.

2.1 AI Ethics

- Domain: Primarily academic and philosophical.

- Objective: Study the moral, social, and legal aspects of designing and using AI.

- Example questions:

  - Should a self-driving car sacrifice one person to save many?

  - Does deploying facial recognition systems without public notice violate privacy rights?

2.2 Ethical AI

- Domain: Applied; business practice.

- Objective: Embed AI Ethics principles into specific products and processes.

- Components:

  - Corporate policies and codes of conduct.

  - Ethical risk assessment and monitoring processes.

  - Technical frameworks and tools for bias control and explainability.

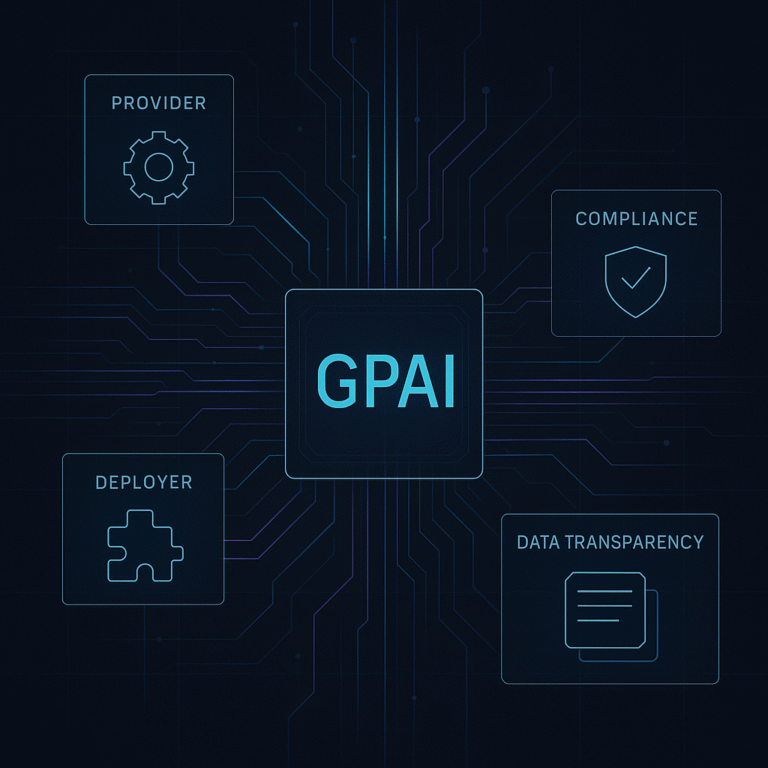

2.3 AI Governance

The system of roles (e.g., AI Ethics Officer, AI Compliance Specialist), policies, and procedures through which an organization manages the AI lifecycle, including committees, working groups, and decision-making mechanisms.

2.4 AI Compliance

The practice of ensuring adherence to legal requirements and standards such as the EU AI Act, GDPR, ISO/IEC 42001, and national laws in the U.S., China, the U.K., and other jurisdictions.

3. Legal and Regulatory Framework and Standards

A robust legal and regulatory environment is the backbone of responsible AI adoption. As jurisdictions worldwide introduce new rules and guidelines, organizations must stay ahead to ensure compliance, mitigate risks, and maintain competitive advantage. This section reviews key laws, standards, and international recommendations that shape the Ethical AI landscape.

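3.1 EU AI Act (proposed 2021, adopted 2024)

- Classifies AI systems by risk level: unacceptable (prohibited), high, limited, and minimal.

- Prohibits practices such as social scoring and, subject to narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement.

- Imposes strict obligations on high-risk systems (risk management, data governance, technical documentation, human oversight) and transparency obligations on limited-risk systems such as chatbots.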
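To make the tiered approach concrete, the sketch below shows how an internal use-case inventory might triage systems into the Act's four tiers (unacceptable, high, limited, minimal). It is a simplified first pass under stated assumptions: the tag names are hypothetical, the example lists contain only cases mentioned in this report (social scoring, credit scoring, recruitment, chatbots), and a real classification has to be checked against the Act's own text, including its annex of high-risk use cases.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"                  # strict obligations before and after market entry
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # no specific obligations


# Hypothetical internal tags; real triage must follow the Act's own definitions.
PROHIBITED_TAGS = {"social_scoring"}
HIGH_RISK_TAGS = {"credit_scoring", "recruitment"}
TRANSPARENCY_TAGS = {"chatbot", "synthetic_media"}


def triage(tags: set[str]) -> RiskTier:
    """Return the most severe tier triggered by any of the use case's tags."""
    if tags & PROHIBITED_TAGS:
        return RiskTier.UNACCEPTABLE
    if tags & HIGH_RISK_TAGS:
        return RiskTier.HIGH
    if tags & TRANSPARENCY_TAGS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    # A recruiting chatbot that screens candidates: the high-risk tag dominates.
    print(triage({"recruitment", "chatbot"}))  # RiskTier.HIGH
    print(triage({"spam_filter"}))             # RiskTier.MINIMAL
```

Returning only the most severe tier is a simplification: a high-risk system can also carry transparency obligations, so a fuller inventory would record every obligation that applies rather than a single label.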

3.2 GDPR (2018)

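- Data subject rights: access, rectification, erasure, data portability, objection, and safeguards against decisions based solely on automated processing (Article 22).

- Mandates “Privacy by Design” and “Privacy by Default” (formally, data protection by design and by default, Article 25) for AI projects that process personal data.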

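3.3 ISO/IEC 42001:2023

- Specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system (AIMS).

- Shares the harmonized management-system structure used by standards such as ISO 9001 (quality management), so it can be integrated with existing management systems and with risk management practices based on ISO 31000.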

3.4 Additional Reference Documents

- OECD AI Principles (adopted 2019) on trustworthy AI.

- National and regional AI strategies (e.g., U.S., China, EU).

4. Core Principles of Ethical AI

Ethical AI is grounded in a set of universal principles designed to protect individuals and society from harm. These tenets guide every phase of model development and deployment, from data preparation to monitoring in production.

4.1 Fairness and Non-Discrimination

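- Analyze datasets for proxy attributes that correlate with protected characteristics (e.g., postal code standing in for ethnicity) and mitigate them through removal, reweighting, or resampling; dropping the protected attribute alone rarely removes bias.

- Measure fairness with metrics such as Demographic Parity (equal positive-outcome rates across groups) and Equal Opportunity (equal true positive rates across groups).

- Typical applications: correcting bias in loan approval and recruitment algorithms.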
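Both metrics named above reduce to comparing simple rates across groups. The sketch below computes them from scratch on toy loan-approval predictions, reporting each as the largest gap between any two groups. The function names and data are illustrative assumptions; libraries such as Fairlearn and AI Fairness 360 provide tested implementations of these and related metrics.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """P(prediction = 1 | group) for each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(y_true, y_pred, groups):
    """P(prediction = 1 | actual = 1, group); groups with no actual positives are skipped."""
    actual_pos, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual_pos[group] += 1
            hits[group] += pred
    return {g: hits[g] / actual_pos[g] for g in actual_pos}


def largest_gap(rates):
    return max(rates.values()) - min(rates.values())


def demographic_parity_difference(y_pred, groups):
    return largest_gap(selection_rates(y_pred, groups))


def equal_opportunity_difference(y_true, y_pred, groups):
    return largest_gap(true_positive_rates(y_true, y_pred, groups))


if __name__ == "__main__":
    # Toy loan data: y_pred 1 = approved, y_true 1 = applicant would repay.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(demographic_parity_difference(y_pred, groups))         # 0.5 (0.75 vs 0.25)
    print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.5 (1.0 vs 0.5)
```

A value of 0 means the groups are treated identically under that metric; which metric and which tolerance to use is a policy decision, not a purely technical one.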

4.2 Transparency and Explainability (Explainable AI)

- Document the data pipeline: data sources, processing steps, algorithms.

- Use SHAP, LIME, and Captum for local and global explanations.

- Produce clear reports for regulators and end-users, featuring plain-language summaries and visualizations.
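SHAP, LIME, and Captum each have their own APIs. To show the underlying idea of a model-agnostic global explanation without depending on any of them, the sketch below swaps in a simpler technique, permutation feature importance: shuffle one feature at a time and measure how much accuracy drops. Unlike SHAP and LIME it gives no per-decision (local) explanation. The toy model, feature names, and data are hypothetical.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Replace only feature j; every other feature keeps its original value.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_loan_model(row):
    # Hypothetical model: approve when income (feature 0) exceeds 50;
    # feature 1 (a marketing-channel flag) is ignored.
    return int(row[0] > 50)


if __name__ == "__main__":
    X = [[30, 1], [60, 0], [80, 1], [40, 0], [90, 0], [20, 1]]
    y = [0, 1, 1, 0, 1, 0]
    scores = permutation_importance(toy_loan_model, X, y)
    for name, score in zip(["income", "channel_flag"], scores):
        print(f"{name}: {score:.3f}")
```

Because the model never reads the channel flag, shuffling it cannot change any prediction and its importance is exactly zero, which is the kind of plain-language evidence a regulator-facing report can cite.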

4.3 Accountability and Responsibility

- Define clear roles: who is responsible for decision-making, implementation, and auditing.

- Establish escalation processes to alert teams when quality or safety metrics deviate from agreed thresholds.

- Conduct regular internal and external audits.
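An escalation process ultimately needs explicit thresholds and named owners. The sketch below assumes a hypothetical two-level policy: a small deviation from the baseline notifies the model owner, while a large one escalates to the AI Ethics Committee described in Section 5. The metric names, threshold values, and routing table are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    warn: float      # deviation that notifies the owning team
    critical: float  # deviation that triggers escalation


# Hypothetical routing of alert levels to accountable roles.
ESCALATION = {"warning": "model owner", "critical": "AI Ethics Committee"}


def check_metrics(current, baseline, thresholds):
    """Compare metrics with their baseline; return (metric, level, deviation, notify) tuples."""
    alerts = []
    for t in thresholds:
        deviation = abs(current[t.metric] - baseline[t.metric])
        if deviation >= t.critical:
            level = "critical"
        elif deviation >= t.warn:
            level = "warning"
        else:
            continue
        alerts.append((t.metric, level, round(deviation, 4), ESCALATION[level]))
    return alerts


if __name__ == "__main__":
    baseline = {"accuracy": 0.90, "demographic_parity_difference": 0.05}
    current = {"accuracy": 0.80, "demographic_parity_difference": 0.08}
    thresholds = [
        Threshold("accuracy", warn=0.02, critical=0.05),
        Threshold("demographic_parity_difference", warn=0.02, critical=0.10),
    ]
    for alert in check_metrics(current, baseline, thresholds):
        print(alert)
```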

4.4 Safety and Reliability

- Test models against adversarial attacks using the Adversarial Robustness Toolbox (ART) and CleverHans.

- Monitor data drift and concept drift over time.

- Adopt DevSecOps practices to build security checks into continuous integration and delivery.
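Data drift can be quantified by comparing a feature's distribution in production with its distribution at training time. The sketch below uses the Population Stability Index (PSI), one common choice among several (the Kolmogorov-Smirnov test is another). The bin edges and the small floor for empty bins are assumptions, and the frequently quoted reading of PSI values (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) is an industry rule of thumb rather than a standard. Concept drift, a change in the relation between inputs and outcomes, requires labelled outcomes and is not covered here.

```python
import math
from bisect import bisect_right


def bin_proportions(values, edges, floor=1e-6):
    """Share of values per bin; bins are (-inf, e0), [e0, e1), ..., [e_last, inf)."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1
    # Floor empty bins so the logarithm below stays finite.
    return [max(count / len(values), floor) for count in counts]


def population_stability_index(reference, current, edges):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(reference, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_level(psi):
    # Rule-of-thumb bands, not a formal standard.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "significant shift"


if __name__ == "__main__":
    training_income = list(range(100))                 # hypothetical training sample
    production_income = [v + 50 for v in range(100)]   # hypothetical shifted sample
    psi = population_stability_index(training_income, production_income, [25, 50, 75])
    print(f"PSI = {psi:.2f}: {drift_level(psi)}")
```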

4.5 Privacy and Data Protection

- Employ privacy-enhancing technologies: differential privacy (e.g., TensorFlow Privacy, Opacus) and, where data cannot be centralized, federated learning (e.g., PySyft).

- Enforce access controls and encrypt data both at rest and in transit.

- Maintain audit logs and traceability systems.
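Differential privacy works by adding noise calibrated to how much one individual's record can change a result. The sketch below shows the classic Laplace mechanism for a counting query using only Python's standard library; it is a teaching example under simplifying assumptions. Python's `random` module is not a cryptographically secure source, and naive floating-point noise generation like this is known to leak information, so production systems should rely on vetted libraries. TensorFlow Privacy and Opacus apply the same principle to model training (DP-SGD) by clipping and noising per-example gradients.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Epsilon-differentially private count of records matching `predicate`.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon gives epsilon-DP.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 58, 62, 19, 47, 33, 71, 28]  # hypothetical records
    rng = random.Random(42)
    for eps in (0.1, 1.0, 10.0):
        noisy = private_count(ages, lambda age: age >= 40, eps, rng)
        print(f"epsilon={eps}: {noisy:.2f} (true count 5)")
```

Smaller epsilon means stronger privacy and noisier answers; each released answer consumes part of an overall privacy budget, which is worth recording in the audit logs mentioned above.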

4.6 Human-Centricity and Well-Being

- Conduct social impact assessments and user experience research.

- Implement user feedback mechanisms.

- Design inclusively to ensure accessibility for people with disabilities.

5. Organizational Structure for Ethical AI Management

Translating ethical principles into practice requires a well-defined governance framework. Without clear roles, processes, and oversight bodies, policies remain theoretical and fail to drive real change. This section describes the organizational structures and key responsibilities that ensure effective AI governance.

5.1 AI Ethics Committee

- Cross-functional body with representatives from IT, Legal, HR, and Marketing.

- Meets regularly to approve ethical guidelines and review high-risk use cases.

5.2 Key Roles

- AI Ethics Officer: Strategic planning and liaison with the board of directors.

- AI Governance Manager: Policy development, reporting, and compliance oversight.

- AI Compliance Specialist: Regulatory alignment and audit preparation.

- AI Risk & Impact Assessor: Risk analysis during design and post-deployment.

- Responsible AI QA Engineer: Model testing and automated checks.

5.3 Core Processes

- Ethical Risk Identification at project initiation.

- Impact and Privacy Risk Assessments, including a Data Protection Impact Assessment (DPIA) where GDPR requires one (Article 35).

- Integration of Ethical Checkpoints in the software development lifecycle (SDLC).

- Monitoring and Reporting: track fairness, transparency, and safety metrics.

- Audits and Remediation: perform audits and apply corrective measures when deviations occur.
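An ethical checkpoint in the SDLC can be automated as a release gate in continuous integration. The sketch below assumes a hypothetical policy in which each risk tier requires certain review artifacts (for example a DPIA and a fairness report for high-risk systems) and a fairness metric must stay under a ceiling; the artifact names, tiers, and ceiling are illustrative, not a prescribed standard.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseCandidate:
    name: str
    risk_tier: str  # "high", "limited" or "minimal"
    artifacts: set[str] = field(default_factory=set)         # completed review documents
    metrics: dict[str, float] = field(default_factory=dict)  # latest evaluation results


# Hypothetical policy: review artifacts required per risk tier, plus metric ceilings.
REQUIRED_ARTIFACTS = {
    "high": {"ethical_risk_review", "dpia", "fairness_report", "model_card"},
    "limited": {"ethical_risk_review", "model_card"},
    "minimal": {"ethical_risk_review"},
}
METRIC_CEILINGS = {"demographic_parity_difference": 0.10}


def ethics_gate(rc: ReleaseCandidate) -> list[str]:
    """Return blocking findings; an empty list means the checkpoint passes."""
    missing = REQUIRED_ARTIFACTS[rc.risk_tier] - rc.artifacts
    findings = [f"missing artifact: {name}" for name in sorted(missing)]
    for metric, ceiling in METRIC_CEILINGS.items():
        value = rc.metrics.get(metric)
        if value is None:
            findings.append(f"metric not reported: {metric}")
        elif value > ceiling:
            findings.append(f"{metric}={value:.2f} exceeds ceiling {ceiling:.2f}")
    return findings


if __name__ == "__main__":
    rc = ReleaseCandidate(
        name="loan-model-v2",
        risk_tier="high",
        artifacts={"ethical_risk_review", "model_card"},
        metrics={"demographic_parity_difference": 0.15},
    )
    findings = ethics_gate(rc)
    for finding in findings:
        print(finding)
    # A CI job could fail the pipeline on any finding, e.g. with sys.exit(1).
    print("PASS" if not findings else "BLOCKED")
```

Keeping the policy in data (the two dictionaries) rather than in code lets the AI Ethics Committee change requirements without touching the gate logic.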

6. Practical Tools and Methodologies

Implementing Ethical AI principles at scale hinges on the right mix of tools and methods. From bias detection frameworks to explainability libraries and privacy toolkits, practical solutions enable teams to embed ethics directly into their workflows.

| Principle | Task | Tools & Practices |
|---|---|---|
| Fairness | Detecting and correcting bias | IBM AI Fairness 360, Microsoft Fairlearn, Google What-If Tool |
| Explainability | Generating decision explanations | SHAP, LIME, Captum |
| Security | Defense against adversarial attacks | Adversarial Robustness Toolbox, CleverHans |
| Privacy | Ensuring Privacy by Design | TensorFlow Privacy, Opacus, PySyft |
| Data Quality | Profiling and cleaning datasets | Great Expectations, Deequ |
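Data-quality tools such as Great Expectations express rules as declarative "expectations" evaluated against a dataset. The standard-library sketch below imitates that idea with two hypothetical checks; it does not reproduce the actual APIs of Great Expectations or Deequ, and the column names and limits are invented for illustration.

```python
from functools import partial


def expect_not_null(rows, column):
    """Every row must have a non-null value in `column`."""
    failed = [i for i, row in enumerate(rows) if row.get(column) is None]
    return {"check": f"{column} is not null", "success": not failed, "failed_rows": failed}


def expect_between(rows, column, low, high):
    """Non-null values in `column` must lie in [low, high]; nulls are left to expect_not_null."""
    failed = [
        i for i, row in enumerate(rows)
        if row.get(column) is not None and not low <= row[column] <= high
    ]
    return {"check": f"{column} in [{low}, {high}]", "success": not failed, "failed_rows": failed}


def run_suite(rows, checks):
    """Run every check; the suite passes only if all checks pass."""
    results = [check(rows) for check in checks]
    return all(r["success"] for r in results), results


if __name__ == "__main__":
    applicants = [
        {"age": 34, "income": 52000},
        {"age": None, "income": 61000},
        {"age": 17, "income": -5},
    ]
    suite = [
        partial(expect_not_null, column="age"),
        partial(expect_between, column="age", low=18, high=100),
        partial(expect_between, column="income", low=0, high=10_000_000),
    ]
    passed, results = run_suite(applicants, suite)
    print("suite passed:", passed)
    for r in results:
        print(r["check"], "->", "ok" if r["success"] else f"failed rows {r['failed_rows']}")
```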

7. Main Challenges and Risks

Even the best-intentioned AI governance programs can falter when faced with conflicting objectives, cultural variations, and rapid technological change. Anticipating and addressing these challenges early is critical to sustaining ethical practices over time.

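- Fairness Metrics: Choosing among mathematical fairness definitions and reconciling them with societal expectations; some definitions are mutually incompatible (for example, calibration and equal error rates across groups cannot in general all hold when base rates differ between groups).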

- Goal Conflicts: Trade-offs between accuracy, speed, explainability, and security.

- Cultural Adaptation: Respecting local norms and values in global deployments.

- Regulatory Lag: Legislation trailing behind technological innovation.

- Unpredictability of Self-Learning Models: Challenges in controlling and testing autonomous learning systems.

8. Implementation Recommendations

A one-size-fits-all approach to Ethical AI rarely succeeds. Different organizational scales and maturities demand tailored strategies that balance ambition with practicality.

8.1 For Large Enterprises

- Comprehensive AI System Audit: Assess risks and ensure compliance with standards and policies.

- Establish a Cross-Functional Ethics Team (AI Ethics Committee).

- Early Integration of XAI and Fairness Tools: Apply them during design and development rather than after deployment.

- Automated Monitoring: Dashboards and alerts for critical metrics.

- Employee Training: Ongoing workshops, certifications, and internal hackathons.

8.2 For Small Businesses and Startups

- Lightweight Risk Audit: Identify and rank the top three risks, typically privacy, bias, and security.

- Use Open-Source/Free Tools: Fairlearn, What-If Tool, SHAP.

- MVP Approach: Pilot ethical solutions in early prototypes.

- Appoint an Ethics Lead without creating a heavy governance structure.

- Partner with Consultants and Communities: Share best practices and templates.

- Minimal Documentation: Maintain a simple wiki or checklists for decision-making.
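For the lightweight risk audit recommended for small teams, a spreadsheet-style likelihood × impact score is often enough to pick the top three risks. The sketch below assumes a hypothetical 1 to 5 rating scale for both dimensions; the example risks and ratings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError(f"{self.name}: ratings must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def top_risks(risks, n=3):
    """Highest likelihood x impact scores first; ties keep their original order."""
    return sorted(risks, key=lambda r: r.score, reverse=True)[:n]


if __name__ == "__main__":
    register = [
        Risk("availability: vendor outage", likelihood=2, impact=2),
        Risk("privacy: personal data in training set", likelihood=4, impact=5),
        Risk("bias: unequal approval rates", likelihood=3, impact=5),
        Risk("security: adversarial inputs", likelihood=3, impact=4),
    ]
    for risk in top_risks(register):
        print(f"{risk.score:>2}  {risk.name}")
```

The resulting short list fits naturally on the simple wiki page or checklist suggested above, together with the owner and next review date for each risk.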

9. Conclusion

As AI continues to reshape industries, embedding ethics into every stage of its lifecycle is no longer optional—it is a strategic imperative. Organizations that proactively integrate ethical governance will build stronger stakeholder trust, mitigate compliance risks, and unlock sustainable growth.