Introduction

By May 2025, the domain of AI governance has encountered a convergence of complex, interrelated challenges requiring systemic and interdisciplinary responses. The rapid acceleration of AI capabilities has been paralleled by a surge in legislative initiatives, intensified demands for algorithmic transparency and fairness, emergent vectors of cybersecurity threats, and heightened scrutiny over environmental sustainability — all occurring against the backdrop of a severe talent shortage in the field.

This article is authored by Viacheslav Gasiunas, a legal strategist and advisor specializing in AI regulation, risk governance, and cross-border compliance. It summarizes key systemic risks shaping the AI landscape in 2025 and offers strategic guidance grounded in regulatory foresight and cross-disciplinary practice.

From regulatory fragmentation to black box opacity and adversarial security, the imperative for trustworthy, explainable, and sustainable AI has never been more urgent.

1. Regulatory Chaos: The Fragmentation of AI Compliance Norms

Regulatory chaos is increasingly evident in the avalanche of AI-related legislative initiatives — ranging from soft-law principles and non-binding declarations to strict statutory requirements enacted at national, regional, and even municipal levels. As a result, companies are forced to adapt simultaneously to dozens of heterogeneous standards, leading to significantly higher compliance costs and prolonged time-to-market for AI-driven products.

This fragmented regulatory puzzle is further exacerbated by the growing number of actors shaping the rules — from national authorities and industry-specific consortiums to international NGOs and private certification bodies, each promoting divergent governance models and oversight expectations.

Why This Becomes Critical in 2025

- Escalation of geopolitical tensions. The rivalry between the United States and China for technological leadership has led to sharply divergent approaches to AI regulation. While China pursues centralized algorithmic oversight and mandatory registration systems, the United States remains fragmented, with state-level regulations that often conflict with federal norms.

- Rising economic and environmental risks. Disparate energy reporting requirements for AI systems across jurisdictions hinder accurate assessment of their carbon footprint. This impedes the development of “green” AI technologies and makes it difficult to compare solutions from different providers.

- Public trust and corporate reputation. Inconsistent legal frameworks reduce the transparency and predictability of regulatory reviews and audits. This increases the likelihood of penalties and reputational damage — especially in high-stakes sectors like finance, where supervisory expectations are rigorous and non-compliance is highly visible.

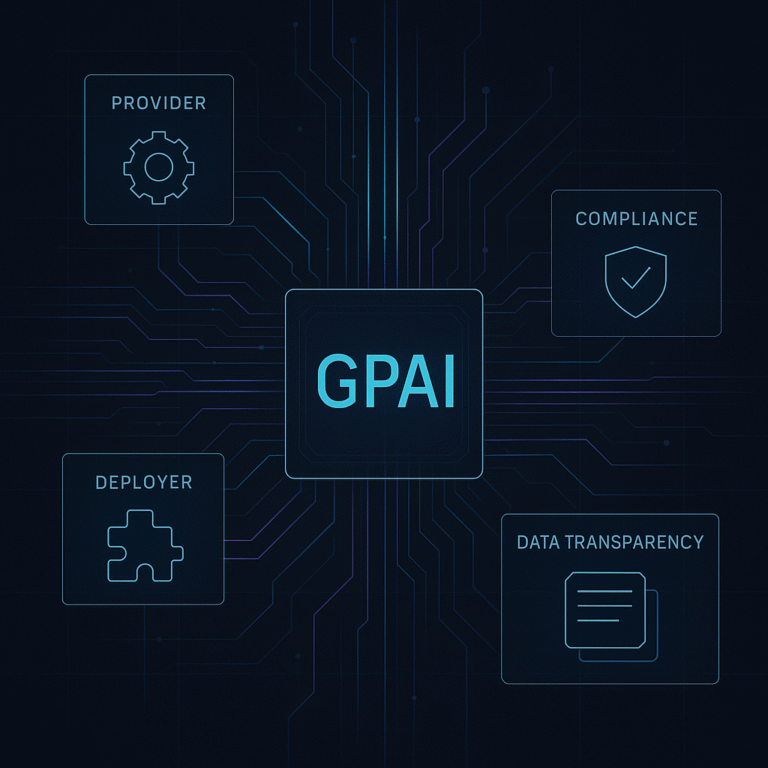

The EU’s Attempt at Harmonization: AI Act and Global Divergence

The European Union, through its recently adopted AI Act , has taken a bold step toward establishing a unified regulatory framework for artificial intelligence. Its risk-based approach classifies AI systems into four distinct categories — from fully prohibited applications (such as social scoring systems) to those permitted under general transparency and ethical principles. This legislation marks a milestone in the evolution of global regulatory thought.

However, major global powers such as the United States, China, and India are not blindly replicating the European model. Instead, they are crafting their own frameworks, each attempting to balance innovation incentives, governance mechanisms, and national interests. While the “Brussels Effect” — wherein EU standards like the GDPR effectively became global norms — has reshaped data protection worldwide, its influence on AI governance remains less certain. Assessing its real impact will require careful monitoring of cross-border transactions, contractual clauses, and emerging case law.

Comparative Overview of AI Regulatory Landscapes

| Region | Key Frameworks / Initiatives | Status (May 2025) | Notable Features | Main Challenges |

|---|---|---|---|---|

| Europe |

– EU AI Act (Regulation 2021/0106) – National AI Sandboxes (Art. 57 AI Act) |

– In force since August 1, 2024 – Phased implementation: high-impact models by Aug 2025; full applicability by 2026 |

– Clear risk classification (prohibited, high-risk, etc.) – Mandatory risk assessments for high-risk systems |

– Varying readiness across Member States – Complex harmonization with national laws |

| United States |

– No federal AI law – State-level initiatives (California, Colorado, Utah, etc.) – “One Big Beautiful Bill” proposes 10-year moratorium on state laws |

– Multiple federal proposals, none enacted – Over 30 state-level laws/resolutions passed by May 2025 |

– Focus on consumer protection and anti-discrimination – Strong lobbying for state-level “AI laboratories” |

– Risk of federal vs. state conflicts – Legal uncertainty due to proposed moratorium |

| China |

– Algorithmic Recommendation Rules (2021) – Deep Synthesis Regulation (2022) – Interim Measures for Generative AI (2024) |

– In effect since 2021–2022 – Interim Measures effective Q1 2025 |

– Centralized algorithm registry – Mandatory labeling of AI-generated content – Strict control of “harmful” information |

– Limited process transparency – Innovation trade-offs due to focus on control |

| Middle East |

– National AI strategies (e.g., UAE 2031) – GCC-level guidance (e.g., digital governance frameworks) |

– Strategies adopted 2021–2023 – Pilot projects in implementation (2024–2025) |

– Investment in smart governance and healthcare – Emphasis on localization and independence |

– Gap between vision and legal execution – Lack of binding regulatory norms |

| South America |

– Brazil AI Bill No. 2338/2023 (passed in Dec 2024) – Regional recommendations and national guidelines |

– Under Senate discussion since March 2025 – Expected to take effect in 2026 |

– Strong emphasis on rights and non-discrimination – Use of social practices as regulatory foundation |

– Uneven development across countries – Limited supervisory resources |

| Other Regions |

– OECD AI Policy Observatory – Global AI Tracking & Guidelines (non-binding) |

– Mostly guidelines and pilot initiatives – No binding laws in most countries |

– Hybrid approaches: soft law to full deregulation – Rise of industry-led self-regulation |

– Regulatory vacuums (notably in Africa and parts of Asia) |

The Fragmentation of Frameworks: Choosing the Right Model

A practical manifestation of regulatory chaos in AI governance is the increasing difficulty faced by companies in selecting an appropriate governance framework for their internal and external AI projects. While widely recognized standards such as the NIST AI RMF, EU AI Act, AIGA AI Governance Framework, ISO/IEC 42001, and the OECD AI Principles provide structured and ethical approaches to risk management, the emergence of additional national and international initiatives — including the Brazilian AI Act, the UK AI Safety Institute Framework, India’s AIForAll Strategy, and the Global AI Foresight Network — adds further complexity and strategic uncertainty.

A comparative analysis of these frameworks, their distinctive features, and potential for practical implementation will be addressed in a forthcoming article.

Implications for Business, Society, and Technology

- Rising operational costs. Organizations are increasingly required to implement adaptable, jurisdiction-specific “compliance-as-code” systems. This significantly increases resource demands and slows down internal R&D cycles.

- Innovation bottlenecks. The diversity and inconsistency of AI regulations across markets delay product launches and raise entry barriers for startups, particularly in cross-border contexts.

- Social risks and distrust. Inconsistent enforcement undermines public confidence in AI technologies and fuels skepticism around their deployment in sensitive domains such as healthcare, legal systems, and social services.

Practical Solutions for Mitigating Regulatory Chaos in AI Governance

-

Centralized monitoring and compliance automation.

Establish a dedicated Regulatory Watch Desk supported by RegTech tools to continuously track legal developments and automatically benchmark internal policies against current requirements. Automated analysis should be supplemented by legal expert review to interpret nuanced or ambiguous provisions. -

Modular, framework-agnostic architecture with “compliance-by-design.”

Develop a core AI governance system based on unified internal policies and extend it through adapters for NIST, the EU AI Act, ISO/IEC 42001, and other standards. Regulatory requirements should be embedded into the design phase of both models and data flows. -

Risk-based audits with optimized resource allocation.

Conduct AI audits proportionally to the system’s risk level: quarterly for high-risk (e.g., medical), biannually for medium-risk, and annually for low-risk systems (e.g., recommender engines). Document outcomes using Model Cards and a centralized Risk Register to support audit-readiness. -

Cross-functional AI Governance Council and regulatory engagement.

Create governance teams that bring together ML engineers, legal compliance experts, cybersecurity officers, and sustainability leaders. Provide continuous training on key frameworks and actively participate in industry alliances and AI regulatory sandboxes. -

Resilience strategy: scenario planning and risk insurance.

Design a Regulatory Resilience Plan that includes tabletop simulations of major regulatory shifts. Complement this with Tech E&O policies that include AI-specific riders to ensure financial and procedural readiness in case of sudden legislative tightening.

2. The Black Box Problem: Bias and Explainability in AI Systems

Problem Description

Modern AI systems — particularly those based on large neural networks — frequently operate as black boxes, producing outputs that are not readily interpretable by developers or users. This opacity introduces two major categories of risk:

- Bias. AI models can replicate and even amplify historical imbalances embedded in their training data — including social, gender, ethnic, or economic disparities — without providing mechanisms for early detection or correction.

- Lack of explainability. When incidents occur — such as discriminatory hiring, credit scoring, or clinical decision-making — it becomes difficult to identify which features influenced the model’s output and whether legal or ethical boundaries have been violated.

Root Causes of the Black Box Problem in AI Governance (2025)

-

Developer subjectivity.

During model development, engineers and researchers unintentionally embed personal assumptions and beliefs into algorithmic architecture and feature selection. This influence can manifest through subtle data filtering or tuning hyperparameters that reinforce specific patterns in the data. -

Low-quality and non-representative data.

Training datasets with gaps, mislabeled examples, or skewed demographics systematically introduce bias. Moreover, data quality controls are often deprioritized in favor of rapid development cycles and time-to-market pressure. -

Model complexity and opacity.

Deep neural networks and large language models consist of billions of parameters, making it virtually impossible to fully understand their internal logic. Modern explainability tools like LIME and SHAP offer approximations — but their outputs are sometimes contradictory and unreliable. -

Tension between business goals and ethical requirements.

The push for faster deployment and KPI optimization often relegates explainability and fairness to secondary concerns, further exacerbating the black box effect in production systems. -

Fragmentation of standards and inconsistent frameworks.

Differences between governance models (NIST RMF, EU AI Act, ISO/IEC 42001, OECD AI Principles) and their partial or delayed adoption create gaps in bias auditing and explainability expectations. Transitioning between regulatory regimes without re-engineering internal processes leads to compliance blind spots and regulatory dead zones.

Regulatory Landscape: Global Norms on Explainability and Bias

European Union

EU AI Act (Regulation 2024/1689): Starting February 2, 2025, high-risk systems are subject to mandatory explainability and Algorithmic Impact Assessments (AIA). Articles 13–15 require developers to provide “sufficient justification” for every significant system output and publish Model Cards indicating limitations and associated risks.

United States

EEOC & State Attorneys General: Despite the removal of the EEOC’s official AI guidance in January 2025 (taken offline on January 27), state consumer protection agencies and Attorneys General in California, Massachusetts, Oregon, New Jersey, and Texas continue to use anti-discrimination and consumer protection laws to address algorithmic bias in hiring and credit decisions.

China

Interim Measures for Generative AI (2023): Effective Q1 2025, these measures require AI service providers to minimize discrimination based on gender, age, and ethnicity, and to disclose key principles of model functionality via public transparency reports.

OECD and International Guidelines

OECD AI Principles (updated 2024): Principle P1.3 (“Transparency & Explainability”) recommends the responsible disclosure of system capabilities and limitations. Developers are encouraged to conduct open testing and publish detailed Protocol Reports to support trust and accountability.

Best Practices: Practical Explainability Guidelines for 2025

-

Integrate XAI tools.

Apply SHAP and LIME for key high-risk models, generating explainability reports automatically at each release stage. -

Explainability by Design.

Create basic Model Cards describing purpose and limitations, paired with a simple AIA checklist. Make them mandatory prior to deployment. -

Risk-based prioritization.

Segment projects by risk level and scale explainability efforts based on the model’s potential impact. -

Governance and ownership.

Establish a cross-functional AI governance council with designated explainability and fairness owners, and adopt a shift-left strategy to embed these requirements early. -

Culture and metrics.

Train teams in XAI fundamentals, track key explainability indicators in dashboards, and tie them to corporate KPIs.

3. AI Security: Emerging Threats and Defensive Strategies Against AI Abuse in 2025

The artificial intelligence security landscape in 2025 presents unprecedented challenges as organizations rapidly deploy sophisticated AI systems while facing increasingly sophisticated threat actors. This report examines the critical security vulnerabilities emerging from widespread AI adoption, the underlying causes of these risks, and evidence-based defensive strategies for organizations seeking to maintain secure and responsible AI deployments.

With over 25% of executives reporting deepfake incidents targeting their organizations and fraud losses projected to reach $40 billion by 2027, AI security has transitioned from a theoretical concern to an operational imperative requiring immediate organizational attention and structured defensive measures.

Problem Description

The AI security threat landscape in 2025 encompasses a wide range of attack vectors that exploit both the technical architecture of machine learning systems and their integration into organizational environments. These risks represent a convergence of classic cybersecurity threats with vulnerabilities specific to AI technologies, creating new defensive challenges for governance, compliance, and security teams.

Prompt injection has emerged as a critical concern for organizations deploying large language models and generative AI systems. Malicious actors exploit natural language inputs to bypass safeguards, extract confidential information, manipulate outputs, or trigger unintended behaviors. This stems from the architectural limitations of current LLMs, which struggle to differentiate between developer instructions and crafted user prompts—making defense difficult without redesigning system logic.

Adversarial attacks continue to evolve, encompassing both black-box and white-box scenarios where model inputs are intentionally modified to produce flawed outputs. These manipulations undermine high-accuracy applications such as fraud detection, medical diagnostics, and autonomous decision-making by subtly exploiting the model’s statistical assumptions.

Model vulnerabilities are amplified by the widespread use of open-source architectures. Downloading pre-trained models from public repositories without thorough validation increases the risk of hidden payloads, backdoors, or misconfigurations. Current scanning tools lack the sophistication needed to detect embedded threats, leaving organizations exposed to attacks initiated at the model level.

Synthetic identity abuse has intensified due to rapid advancements in deepfake technology. AI-generated audio, video, and text content are now being used to impersonate individuals, forge documents, and undermine digital trust systems. These attacks erode confidence in authentication mechanisms and present systemic risks for sectors relying on biometric verification and digital content validation.

Agentic AI risks are emerging as enterprises integrate autonomous agents into workflows. These agents can act without direct human oversight, and if compromised, may propagate malicious actions across interconnected systems. As agentic AI blurs the line between automation and autonomy, it introduces novel threat surfaces for phishing, data exfiltration, and logic corruption.

Causes and Real-World Examples of AI Security Vulnerabilities

The root causes of AI security vulnerabilities in 2025 stem from systemic flaws in development practices, inadequate integration of security mechanisms, and the inherent complexity of modern machine learning systems. These underlying issues create fertile ground for successful exploitation, while simultaneously limiting organizational defense capabilities.

-

Model opacity (“black box” effect):

The lack of interpretability in advanced AI systems remains a fundamental obstacle to implementing robust security protocols. As previously discussed in this article, opaque architectures make it difficult to detect anomalies, predict behavior, or verify safeguards. -

Insufficient red teaming and adversarial testing:

Many organizations lack structured red teaming programs tailored to AI-specific threats. Traditional security testing frameworks fail to address vulnerabilities such as prompt injection, adversarial inputs, and model inversion. The absence of AI-savvy personnel and mature testing protocols leaves systems exposed to sophisticated attack vectors. -

Low threat awareness in development teams:

Developers and data scientists often deploy AI models without sufficient training in cybersecurity principles. This gap results in insecure architectures that are vulnerable to manipulation, extraction, and input-based exploitation. Without dedicated threat modeling, key risks remain undetected during the development lifecycle. -

Insufficient AI-specific DevSecOps integration:

Many CI/CD pipelines lack automated security scanning for AI artifacts. This leads to insecure or even malicious models being deployed into production without proper validation. The absence of explainability, bias detection, or dependency checking in release workflows creates hidden security gaps.

Real-world incidents illustrate the operational impact of these vulnerabilities. In 2024, a wave of high-profile deepfake fraud cases led to multimillion-dollar losses, including incidents where employees were tricked into transferring funds based on AI-generated voice and video content. Prompt injection attacks have also been used to compromise live AI systems, revealing sensitive data through carefully crafted queries and bypassing guardrails. These are no longer theoretical weaknesses but measurable business risks.

The sophistication and scale of AI-enabled attacks have grown rapidly. Threat actors now use AI to enhance traditional penetration testing and exploit research, including the deployment of semi-autonomous offensive models. This signals a need for defense strategies that can counter adversaries augmented by AI and equipped with scalable attack capabilities.

Causes of AI Security Vulnerabilities

AI security risks in 2025 arise from systemic flaws in development culture, insufficient operational safeguards, and the inherent complexity of modern machine learning systems. These root causes create exploitable weaknesses while constraining organizational detection and response capabilities.

Model opacity and lack of transparency.

Many AI models function as black boxes, limiting the ability to trace outputs, understand decision-making processes, detect anomalies, or audit internal logic. This lack of visibility complicates efforts to validate model integrity and explain the root causes of vulnerabilities or biases—especially when working with externally sourced or open-weight architectures like many advanced Large Language Models (LLMs).

Inadequate red teaming and adversarial testing.

Traditional security testing frameworks and teams are often not equipped with the specialized skills, methodologies, or tools to surface AI-specific threats such as prompt injection, adversarial example generation, or model inversion. Without dedicated processes, skilled personnel, and appropriate tooling, organizations lack proactive threat simulation and fail to identify vulnerabilities before deployment.

Low security awareness in development teams.

AI developers and data scientists, often prioritizing model performance and functionality, are frequently unfamiliar with cybersecurity principles specific to AI systems. This knowledge gap leads to insecure design decisions, such as the absence of robust input sanitization and output encoding, and poor understanding of attack surfaces related to model behavior, data exposure, and potential model manipulation.

Lack of secure integration into CI/CD pipelines.

Current DevOps and MLOps workflows rarely include robust AI-specific security validation steps. Missing scans for vulnerabilities in model code and dependencies, inadequate testing against known AI attack vectors (e.g., data poisoning, adversarial inputs), or insufficient security for model artifacts (weights, configurations) allow flawed or compromised models to reach production without scrutiny.

Escalation of attacker capabilities through AI tools.

As threat actors adopt AI-assisted methods to automate attack planning, vulnerability discovery, and payload development, traditional defensive strategies reliant on signature-based detection or manual threat hunting become increasingly insufficient. Without adaptation to these AI-enabled threats, organizations face widening exposure and a shrinking response window across all critical AI touchpoints.

Practical Recommendations: Building a Secure AI Deployment Strategy

-

Institutionalize AI-Focused Red Teaming and Adversarial Testing.

Proactively simulate attacks such as prompt injection, data poisoning, model inversion, and extraction by forming dedicated AI red teams or partnering with specialized third parties. Regular adversarial exercises—based on frameworks like MITRE ATLAS—help uncover security blind spots before deployment. Feed successful attack patterns back into retraining loops to harden models over time. -

Embed Security into DevSecOps and MLOps Pipelines.

Integrate AI-specific security validations directly into CI/CD workflows to prevent the promotion of vulnerable or malicious models. Implement automated scans for compromised artifacts, dependency vulnerabilities (CVEs), and AI-specific supply chain risks. Require security sign-off before each model update reaches production to maintain a compliant release process. -

Implement Risk-Based Governance and Policy Frameworks.

Develop clear internal policies aligned with leading frameworks such as NIST AI RMF, ISO/IEC 42006, and the EU AI Act. Classify AI systems by risk level (e.g., external-facing, decision-making vs. internal low-impact tools) and apply proportionate security controls across the lifecycle—from acquisition and training to deployment and monitoring. -

Integrate Explainability and Immutable Audit Logging.

For every high-risk AI decision, generate explainability artifacts using tools like SHAP or LIME and store them alongside metadata in your Model Registry. Enable streaming of input/output logs, configuration changes, and model activity into a central SIEM to support forensic investigations, bias detection, and audit-readiness.

4. Green AI and the Search for Global Governance Standards

Two seemingly distinct but closely interlinked challenges are shaping the future of AI governance in 2025: the rapid growth in AI-related energy consumption and the lack of global coordination in establishing common governance standards. As AI models become larger and more resource-intensive, sustainability concerns have moved to the forefront. At the same time, fragmented national and industry-specific frameworks hinder the creation of a unified, ethical, and environmentally responsible AI ecosystem.

The Energy Appetite of Artificial Intelligence

Training and operating large-scale AI models requires enormous amounts of electricity. As of 2025, industry estimates suggest that the total energy consumption associated with AI workloads is now comparable to that of entire nations. This creates not only a financial burden but also a significant environmental challenge, particularly when considering the carbon footprint of energy production.

Major technology firms are investing billions into their own energy infrastructure—including nuclear energy initiatives—to meet the soaring demands of their AI operations. These investments highlight both the scale of AI growth and the lack of readiness in existing energy grids to sustainably support future models.

Modern AI governance systems must now incorporate environmental impact assessments and actively promote the development of Green AI: more energy-efficient algorithms, hardware-optimized deployment strategies, and responsible operational practices. Sustainability is no longer optional—it is a strategic imperative for long-term AI resilience and trust.

The Challenge of Global Standardization in AI Governance

Despite the efforts of international bodies such as the UN and OECD, no universally accepted global framework for AI governance currently exists. Countries and regions continue to advance their own regulatory models, deepening the fragmentation discussed earlier in this report. This divergence makes it increasingly difficult to establish consistent practices and principles across borders.

Lack of harmonization impedes international collaboration, hinders cross-border data sharing and innovation, and raises the risk of a regulatory “race to the bottom”—where companies gravitate toward jurisdictions with the weakest oversight to reduce compliance burdens.

There is a growing need for compromise and the development of flexible yet globally recognized frameworks that can guide responsible AI development and deployment. In particular, international standards could include requirements for energy efficiency and environmental impact assessments, helping to unify global efforts around sustainable AI practices.

Recommendations for Implementing Sustainable AI Practices

-

Align with existing standards.

Adopt ISO/IEC 42001 (AI Management Systems), the first international framework to include principles for environmental responsibility and long-term sustainability in AI governance. -

Introduce Green AI metrics into KPIs.

Integrate energy efficiency and environmental impact into key performance indicators (KPIs) for AI projects to promote measurable accountability and carbon-aware model development. -

Support open and decentralized innovation.

Participate in Open Data and Federated Learning initiatives to reduce redundant training cycles and lower the overall compute burden associated with model development.

5. AI Governance Talent Crisis: Workforce Gaps and Strategic Responses for 2025

As regulatory frameworks and AI technologies evolve at unprecedented speed, the most persistent barrier to effective and scalable AI governance in 2025 is not technical—it’s human. A severe shortage of qualified professionals capable of navigating the complex landscape of AI risk, ethics, and compliance is affecting organizations of all sizes and sectors. This workforce gap threatens to slow down responsible innovation and increase exposure to uncontrolled AI deployment.

Scope of the AI Governance Talent Shortage

Recent labor market analyses show a growing mismatch between organizational demand and available expertise:

- Severe supply-demand gap: According to LinkedIn Talent Insights (Q3 2025), global demand for AI governance professionals exceeds supply by 45–60%, particularly in AI development hubs such as North America, Western Europe, and Asia-Pacific.

- Shortage of hybrid professionals: The most acute deficit is among experts who combine AI/ML fluency with regulatory, legal, ethical, or compliance knowledge. These roles are essential for implementing policies and integrating them into real-world MLOps workflows.

- High-demand roles: Positions such as AI Governance Manager, Ethics Officer, Compliance Specialist, and AI Risk Auditor remain difficult to fill, even as they become mission-critical across industries.

- Gap between academic output and applied skills: While academic programs proliferate, graduates often lack practical, interdisciplinary experience. Employers increasingly seek candidates with proven project experience rather than purely theoretical backgrounds.

Why the Talent Gap Is Widening

- Technology-regulation velocity mismatch: The pace of innovation and new AI legislation outstrips universities’ and training systems’ ability to update curricula.

- High qualification thresholds: Effective AI governance requires a blend of technical, legal, ethical, and strategic capabilities, narrowing the qualified talent pool.

- Talent competition: Large tech firms and financial institutions offer top salaries and benefits, making it hard for SMEs and public sector entities to compete.

- Low awareness of AI governance as a career path: Professionals in cybersecurity, data governance, or legal compliance are often unaware of the field’s growth, relevance, or requirements.

Strategic Responses to the AI Governance Talent Shortage

- Expand interdisciplinary education: Universities and business schools must launch master’s programs that combine AI, ethics, law, and risk management. Partnerships with industry should ensure curriculum relevance.

- Promote accessible online training: Platforms like Coursera, edX, and Udacity should offer high-quality AI governance specializations for professionals in adjacent fields.

- Invest in workforce retraining: Corporations should build internal academies to retrain staff from adjacent areas such as data s

Conclusion: AI Governance Is a Marathon, Not a Sprint

The landscape of AI governance in 2025 is defined by complexity, speed, and convergence of risks. Regulatory uncertainty, technical opacity, ethical tensions, and talent shortages all demand holistic, adaptive responses that evolve alongside technology itself.

These challenges should not be viewed as barriers, but as catalysts for innovation in how we govern, deploy, and scale artificial intelligence. Effective AI governance requires not only risk frameworks and compliance tools, but also a mindset rooted in systems thinking, cross-functional collaboration, and continuous learning.

Organizations that invest in building flexible, resilient AI governance infrastructures will be better positioned to reduce liability, earn stakeholder trust, and unlock the full potential of AI-driven transformation. Governance becomes not just a defensive shield—but a strategic advantage.

Above all, progress in AI governance depends on collective action. Developers, policymakers, researchers, legal experts, educators, and business leaders each have a role to play in ensuring that AI serves the public good. The road ahead is long, but the opportunity is historic.